Three ways to get three colors using one or three CCDs

Written August 2000. A version of this article appeared in DV Magazine, December 2000. © 2000-2004 Adam J. Wilt

In our discussions so far we haven't talked about color and how it's produced in CCD cameras. It's time to address that omission, and look at some of the artifacts and pathologies of the methods used.

CCDs themselves aren't sensitive to color; a CCD is a monochrome device sensing changes in brightness (to be perfectly pedantic, CCDs do have a spectral sensitivity, and it's more heavily weighted towards infrared than it is towards blue, but nonetheless a CCD by itself can't discriminate color). Color information is obtained by putting colored filters between the CCD and the subject; the filtration can be on a per-chip basis, as is done in 3-chip cameras, or on a per-pixel basis, in 1-chip cameras.

Three Chips On a Block

The easiest way to build a color camera, conceptually at least, is to use 3 CCDs, one each for red, green, and blue colors. A beamsplitting prism block behind the lens breaks the image into three identical copies (Figure 1), and passes them off to three CCDs through filters of the three primary colors. The chips are hard-mounted to the prism in perfect register, so that the images on all three are directly superimposed (unless pixel-shift is being used, in which case the green CCD is physically offset half a pixel horizontally).

Each CCD receives the entire image; full-bandwidth R, G, and B signals make color processing simple. Each CCD can be optimized for the color it will "see", by chemical doping to alter its spectral sensitivity, for example. Adjusting the color balance of the overall signal is a simple matter of adjusting the gains and black levels of the three chips relative to one another.
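To make the color-balance idea concrete, here is a minimal sketch, assuming normalized signal levels and a neutral white card as the reference. The function names, the sample readings, and the choice to leave green at unity gain are illustrative assumptions, not any particular camera's circuitry.

```python
def balance_gains(white_rgb, black_rgb=(0.0, 0.0, 0.0)):
    """Return per-channel gains that make a neutral reference read R = G = B.

    Green is left at unity gain (an assumption for this sketch) and the
    red and blue channels are scaled to match it.
    """
    r, g, b = (w - k for w, k in zip(white_rgb, black_rgb))
    return (g / r, 1.0, g / b)


def apply_balance(rgb, gains, black_rgb=(0.0, 0.0, 0.0)):
    """Subtract each channel's black level, then apply that channel's gain."""
    return tuple(gain * (v - k) for v, gain, k in zip(rgb, gains, black_rgb))


# Hypothetical readings from a white card under warm (reddish) light.
white_card = (0.90, 0.60, 0.30)
black = (0.02, 0.02, 0.02)

gains = balance_gains(white_card, black)
balanced = apply_balance(white_card, gains, black)
print(gains, balanced)
```

After balancing, the three channels of the white card agree with one another, which is all "white balance" really means at this level.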

Three-chip cameras yield the highest quality pictures, so this configuration is used in the best-quality cameras. Of course, three chips cost a lot more than one chip, and CCD chips are still the most expensive single electronic component in cameras, so 3-CCD cameras are correspondingly pricey.

The prism block itself is bulky (at least compared to no prism block), and it enforces a large physical separation between the image plane of the chip and the back of the lens. This separation (the "flange back" distance) forces lens designers to employ more complex, costly retrofocus designs in the wide-angle part of a zoom lens's range. This is one reason why superwide zooms for 3-chip cameras are both rare and expensive, and why the zooms on most cameras are much more generous at the telephoto end than they are at the wide end.

One Chip, Off the Block

Single-chip cameras can be both cheaper and more compact, but they pay the price in increased processing complexity and artifacting. Since there's only one chip to make an image, that single chip has to perform color discrimination itself, which it does through an array or "mosaic" of color filters on the surface of the chip itself.

Video cameras usually use one of two different mosaic patterns using a 2x2-pixel repeating "cell". Both rely on interpolation in both the horizontal and vertical directions to derive the luma (Y) and chroma (R-Y, B-Y) signals. This is not as horrible as it sounds; remember dual-row readout ("Fields and Frames...," DV Magazine, September 2000)? Since each resulting video scanline is already the summation of two rows of pixels, one-chip cameras will merge pixel values in the two rows used for an output scanline, so there's not really any loss of vertical resolution from this process, at least for the luma signal.

Horizontally, though, adjacent pixels need to be combined to derive the full set of color values needed for processing, so you'll typically find that single-chip cameras have higher pixel counts than their three-chip brethren (and sistren?). A general rule of thumb is that a one-chip needs a bit less than twice the CCD pixel count of a three-chip for a comparable resolution; that's not precisely true, depending on how you run the numbers, but it's a good rough guess.

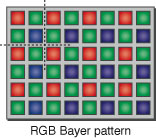

The primary color RGB Bayer pattern (Figure 2) has excellent colorimetry. Derivation of Y, R-Y, and B-Y signals is straightforward; in ITU-R BT.601-5 colorimetry (the colorimetry of standard-definition component digital formats) for example:

Y = 0.299 R + 0.587 G + 0.114 B

R-Y = 0.701 R - 0.587 G - 0.114 B

B-Y = -0.299 R - 0.587 G + 0.886 B

These equations may be realized in analog as resistor networks, or may be computed digitally in a DSP, depending on the camera.
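For the digital case, a minimal sketch of the same arithmetic follows, assuming R, G, and B are normalized to the range 0 to 1. The function name is made up for illustration; the coefficients are the 601 values quoted above.

```python
def rgb_to_color_difference(r, g, b):
    """Return (Y, R-Y, B-Y) using the 601 luma coefficients quoted in the text."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, r - y, b - y


# A neutral (white) input has full luma and no color difference...
white = rgb_to_color_difference(1.0, 1.0, 1.0)

# ...while pure red shows up mostly in the R-Y signal.
red = rgb_to_color_difference(1.0, 0.0, 0.0)
print(white, red)
```

Computing R-Y and B-Y by subtracting Y gives the same result as the expanded equations, since 1 - 0.299 = 0.701 and 1 - 0.114 = 0.886.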

The 2x2-pixel cell of the Bayer pattern uses two green pixels, but only one red and one blue. Kodak researcher Bryce Bayer, for whom the pattern is named, noted that the human eye is about twice as sensitive to green as to other colors (as the coefficients in the 601 equations imply), so he assigned two pixels to green for the most accurate pickup of that color.
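A small sketch may help show what the mosaic does to an image. The cell layout below (green and red on one row, blue and green on the next) is an assumption, since the exact arrangement depends on the chip, and the per-cell averaging is the crudest possible reconstruction rather than what any real camera does.

```python
# Assumed 2x2 cell layout; the real arrangement on a given chip may differ.
CELL = (("G", "R"),
        ("B", "G"))
CHANNEL = {"R": 0, "G": 1, "B": 2}


def filter_at(row, col):
    """Name of the color filter sitting over the pixel at (row, col)."""
    return CELL[row % 2][col % 2]


def mosaic(image):
    """Sample an image (rows of (r, g, b) tuples) through the filter array.

    Each pixel keeps only the one color its filter passes.
    """
    return [[px[CHANNEL[filter_at(y, x)]] for x, px in enumerate(row)]
            for y, row in enumerate(image)]


def cell_to_rgb(raw, cell_row, cell_col):
    """Rebuild one RGB value from a 2x2 cell, averaging its two green samples."""
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    counts = {"R": 0, "G": 0, "B": 0}
    for dy in range(2):
        for dx in range(2):
            y, x = 2 * cell_row + dy, 2 * cell_col + dx
            name = filter_at(y, x)
            sums[name] += raw[y][x]
            counts[name] += 1
    return tuple(sums[c] / counts[c] for c in "RGB")


# A flat patch of a single (hypothetical) color, 4x4 pixels.
flat = [[(0.8, 0.5, 0.2)] * 4 for _ in range(4)]
raw = mosaic(flat)
recovered = cell_to_rgb(raw, 0, 0)
print(raw[0], raw[1], recovered)
```

On a flat patch the cell hands back the original color exactly, because every pixel in the cell saw the same light. Trouble only starts when the image changes within a cell.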

Oh, What Complementary Colors!

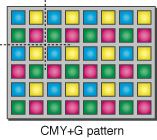

Some designs use a complementary color CMY+G pattern instead (Figure 3). The dye layers used for the cyan, magenta, and yellow filters tend to be more efficient than RGB dyes; they pass more of the light to be filtered while blocking more of the light to be excluded. As a result, chips using CMY+G filters tend to be almost twice as sensitive as chips using RGB filters; low-light performance is improved and the noise at any light level is reduced. Given the tiny pixels needed for good resolution, and the fact that signal summation across three chips isn't available to maximize signal-to-noise ratios, anything that minimizes noise is a good thing.

Green, of course, is a primary color, not a complementary color, and it can be derived from simple math using the CMY values; why add this redundant green color to a CMY cell? Remember that we're most sensitive to green detail; having a primary green pixel in the cell improves resolution in that important signal. Also observe that the "cells" alternate arrangements: the 2x2 cells on pixel rows 1 and 2 place yellow over magenta and cyan over green; on rows 3 and 4 the arrangement is yellow over green and cyan over magenta. On some chips dual-row readout pixel merging is done on-chip, yet you can still obtain the needed Y, R-Y, and B-Y signals - or passable facsimiles thereof - with simple addition and subtraction of adjacent CMY+G cells. If you really want the gory details, check out the spec sheets for any of the interlaced color CCDs at http://products.sel.sony.com/semi/ccd.html.
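Here is a rough sketch of that addition and subtraction, under a loud assumption: the dyes are treated as ideal, so cyan passes exactly G+B, magenta R+B, and yellow R+G. Real filters are not this tidy, and the function names and the direction of each subtraction are illustrative choices, not taken from any spec sheet.

```python
def complementary(r, g, b):
    """Ideal filter responses (assumption: each dye passes exactly two primaries)."""
    return {"Cy": g + b, "Mg": r + b, "Ye": r + g, "G": g}


def green_from_cmy(cy, mg, ye):
    """Recover green from ideal CMY values: (G+B) + (R+G) - (R+B) = 2G."""
    return (cy + ye - mg) / 2


def line_signals(r, g, b):
    """Sum vertically paired pixels, then add/subtract the adjacent column sums."""
    s = complementary(r, g, b)
    # Rows 1+2: yellow over magenta, cyan over green.
    a1, b1 = s["Ye"] + s["Mg"], s["Cy"] + s["G"]
    # Rows 3+4: yellow over green, cyan over magenta.
    a2, b2 = s["Ye"] + s["G"], s["Cy"] + s["Mg"]
    return {
        "luma_12": a1 + b1,    # 2R + 3G + 2B
        "chroma_12": a1 - b1,  # 2R - G, a stand-in for R-Y
        "luma_34": a2 + b2,    # 2R + 3G + 2B
        "chroma_34": b2 - a2,  # 2B - G, a stand-in for B-Y
    }


signals = line_signals(3, 5, 7)
green = green_from_cmy(cy=5 + 7, mg=3 + 7, ye=3 + 5)
print(signals, green)
```

Under these assumptions both row pairs give the same luma-like sum, while alternate row pairs deliver a red-ish and a blue-ish difference signal. That is why the cells alternate their arrangement, and why the results are facsimiles of Y, R-Y, and B-Y rather than the 601 signals themselves.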

Other color cell combinations are possible, such as a CMY Bayer pattern with two yellow pixels. For the most part, though, these are found in digital still cameras where pixel interpolation happens over more scanlines, often in non-real-time.

In either the RGB or CMY+G cases, as long as image detail is larger than the individual pixels, one-chip cameras make very nice looking pictures. As fine detail approaches the size of the pixels, though, it illuminates only some of the pixels in each cell, and color fringing or color moiré artifacts are likely to occur, even after "de-Bayerizing" the image (see Peter Cockerell's website for details). Because the moiré shifts as the image moves, it can be quite noticeable and distracting when shooting detailed subjects. Such artifacts are usually the giveaway that an image was shot with a one-chip camera, although three-chip cameras combining pixel-shift with insufficient optical low-pass filtering are also subject to fringing and moiré.
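The effect is easy to reproduce in a toy model. The sketch below assumes a 2x2 cell with green and red on one row and blue and green on the next, no optical low-pass filter, and a naive per-cell reconstruction; all of these are simplifications for illustration, and real cameras interpolate far more carefully.

```python
# Assumed 2x2 cell layout; the real arrangement on a given chip may differ.
CELL = (("G", "R"),
        ("B", "G"))


def stripes(width, phase=0, size=4):
    """Black-and-white vertical stripes, each `width` pixels wide.

    The scene is colorless: every pixel is either 1.0 (white) or 0.0 (black).
    """
    return [[1.0 if ((x + phase) // width) % 2 == 0 else 0.0
             for x in range(size)]
            for _ in range(size)]


def reconstruct_cell(gray, cell_row=0, cell_col=0):
    """Sample a gray scene through the mosaic and rebuild one RGB value per cell."""
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    counts = {"R": 0, "G": 0, "B": 0}
    for dy in range(2):
        for dx in range(2):
            name = CELL[dy][dx]
            sums[name] += gray[2 * cell_row + dy][2 * cell_col + dx]
            counts[name] += 1
    return tuple(sums[c] / counts[c] for c in "RGB")


coarse = reconstruct_cell(stripes(2))            # stripe as wide as a cell
fine = reconstruct_cell(stripes(1))              # stripes one pixel wide
fine_moved = reconstruct_cell(stripes(1, phase=1))  # same stripes, moved one pixel
print(coarse, fine, fine_moved)
```

The cell-wide stripe comes back neutral, but the one-pixel stripes light only half the filters in each cell and come back strongly tinted, even though the scene has no color in it. Moving the stripes by a single pixel swaps the tint to its opposite, which is the shifting behavior that makes the artifact so visible on moving pictures.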

The staggered, checkerboard arrangement of the different colored pixels does a good job of minimizing moiré, but often some sneaks through, especially on pathological images like test charts. Different patterns offer different tradeoffs; there are patterns that perform better on some test images, but in general some form of 2x2 Bayer-like pattern is the most practical choice.

When watching a composite analog feed, the moiré from one-chip cameras or pixel-shifting techniques is often swamped in the cross-color artifacts of composite video: the shimmering patterns of green and magenta atop fine detail in NTSC video, or the orange and blue moiré seen on PAL broadcasts. It's an issue in image processing and compositing, though, and any picture degradation contributes to the final image quality or lack thereof, so it's something to be aware of. And, as we are moving to a digital component future, both in DVD and DTV distribution, color fringing and moiré are likely to become more noticeable as factors in picture quality.

Still Questions?

You might be wondering why expensive digital still cameras are almost entirely single-chip affairs, yet high-end video cameras are three-chip jobs. Why not use three chips in still cameras for best quality?

Still cameras are more space-constrained than video cameras; digicams compete with 35mm and APS film cameras with compact bodies. A prism-equipped still camera isn't a good candidate for pocket or purse, or slinging around one's neck on a strap.

At the high end, the flange back limitations of prism-based three-chippers preclude the use of standard still camera lenses, even those that are built with the flipping mirror of SLR designs in mind. Digicams like the top-end Kodaks, Nikons, Canons, and Fujis wouldn't be able to use existing still camera lenses.

Fringing and moiré from a mosaic filter are far more distracting on moving images than on still images, and are more noticeable on comparatively low-resolution video images to begin with.

Big, high-resolution chips are expensive, and three chips are much more expensive than a single chip. Consider the following Megapixel counts:

| Camera type | Megapixels |
| --- | --- |
| 3-chip video, SDTV | .27 - .45 |
| 1-chip video, SDTV | .34 - .68 |
| 3-chip video, HDTV | 1 - 2 |
| 1-chip still cameras | 1.5 - 3.3 (at least this week... [Summer 2000]) |

Consider that Panasonic's DVCPRO HD camcorder with 1 Megapixel chips costs $45,000, while the 2 Megapixel version costs $60,000. Bumping up each chip in that 3-chip camera from 1 to 2 Megapixels costs $5000. Given that still cameras are in a lower price range than "movie cameras" to begin with, yet have higher pixel counts, the price picture should be clear.

Size, cost, moiré visibility, and flange back distance all contribute to the prevalence of one-chip designs in digital still cameras; three-chip digicams have been made, but they are rare.

Indeed, as pixel densities increase, and the price of realtime digital signal processing comes down, might single-chip video cameras someday muscle out three-chippers at the high end? Why not? Stranger things have happened!

You are granted a nonexclusive right to reprint, link to, or frame this material for educational purposes, as long as all authorship, ownership and copyright information is preserved and a link to this site is retained. Copying this content to another website (instead of linking it) is expressly forbidden.

Last updated 2004.02.05 - updated URLs