Scanning a line of video is simple. Stacking those lines into fields and frames is more interesting...

Written June 2000. A version of this article appeared in DV Magazine, September 2000. © 2000-2004 Adam J. Wilt

You may not have realized it, but you've been watching 2:1 compressed video most of your life.

Early television system designers faced a fundamental tradeoff. They could either get a decent frame rate, or enough resolution for a "high definition" picture. The electronics of the day didn't have the bandwidth to handle high resolution, high frame rate pictures; it was one or the other.

In 1929, RCA applied for a patent in which a TV picture was broken into two interlaced fields, one with all the even scanlines, one with all the odd scanlines. By transmitting half the picture, then the other half, the system can refresh the displayed image twice as often as it could by sending the entire frame in one chunk. This doubled refresh rate is fast enough to reduce flicker, while interlacing the two fields gives good resolution.

Starting with 343-line broadcasts in 1935, interlaced scanning has been used in every analog television standard (with the exception of a 525-line progressive system used in Japan, and some special-purpose medical and industrial systems). Interlace is a 2:1 compression system, halving the data rate needed for capturing, storing, and transmitting a picture, when compared with full-frame "progressive scan" television with the same number of scanlines. But like all compression, it has side effects. There ain't no such thing as a free lunch.

Interlaced Intricacies

NTSC television displays 486 scanlines of active picture content 30 times a second (for simplicity's sake, I'll gloss over the fact that it's really more like 29.97 times a second; that DV only records 480 lines; or that analog gear mostly uses 483 lines. I'll also stick to NTSC-compatible systems, though the same principles apply to PAL and SECAM, all analog and some digital HDTV systems, and so on.)

Each frame consists of two fields, each with 243 active scanlines. One field is displayed in 1/60 of a second, then the other follows 1/60 of a second later. Since the critical flicker fusion frequency of the human visual system is roughly 60 Hz, we can look at a TV and see what looks like a solid, steady picture, even though most of the time the CRT's faceplate is dark (LCDs, DLPs, and plasma panels work differently, but that's another article...). And because each interlaced field fills in the "missing" lines from the one before it, we get more vertical detail than a 243-line, non-interlaced picture would allow. It's a clever system.

However, we can't really get 486 lines of vertical detail. Recall that a "TV line" is a single black or white line ("Lookin' Sharp", DV, August 2000); to get 486 TV lines vertically requires black and white lines only a single scanline tall. You can build such a picture in any paint program; simply alternate rows of black and white pixels. If you send it out to video, though, what you'll get is a flickering, strobing nightmare: with one field of all-white scanlines and the other all black, the white parts of the picture are refreshed only 30 times a second, below the roughly 60 Hz flicker fusion threshold, so you will see flicker! In its more usual manifestation, single-line details in otherwise-quiescent graphics will flicker at 30 Hz, an amusingly named but extremely annoying artifact called twitter.

In graphics, the cure for interlace twitter is to avoid single-pixel lines, or to "deflicker" the image by blurring it slightly in the vertical direction. The idea is to ensure that both fields contain roughly equal brightness energy; the original field might have one line of 100% brightness while the other field has its two adjacent scanlines at 30% - 50% brightness. As the fields are displayed, the single full-brightness line alternates with the two half-brightness lines; your eye integrates these over time and doesn't detect any flickering.
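To make the energy-balancing idea concrete, here is a minimal Python sketch of a vertical deflicker filter. It assumes a normalized 3-tap [0.25, 0.5, 0.25] kernel (a common textbook choice, not any particular product's filter), represents an image as a list of rows of 0.0 to 1.0 brightness values, and treats even-indexed rows as one field and odd-indexed rows as the other. The helper names `deflicker` and `field_energy` are hypothetical.

```python
# Minimal vertical "deflicker" sketch. Images are lists of rows, each row a
# list of brightness values from 0.0 (black) to 1.0 (white).
# ASSUMPTION: a normalized [0.25, 0.5, 0.25] vertical kernel; real deflicker
# filters vary in their weights and number of taps.

def deflicker(image, weights=(0.25, 0.5, 0.25)):
    """Blur each column vertically with a 3-tap kernel (top/bottom rows clamped)."""
    top, mid, bot = weights
    n = len(image)
    out = []
    for r in range(n):
        above = image[max(r - 1, 0)]
        below = image[min(r + 1, n - 1)]
        out.append([top * a + mid * c + bot * b
                    for a, c, b in zip(above, image[r], below)])
    return out

def field_energy(image, parity):
    """Total brightness of one field's rows (0 = even-indexed rows, 1 = odd-indexed rows)."""
    return sum(sum(row) for row in image[parity::2])

# A single one-scanline-tall white line on black (one pixel wide for simplicity).
line = [[0.0], [0.0], [1.0], [0.0], [0.0]]
print(field_energy(line, 0), field_energy(line, 1))   # 1.0 0.0  (all energy in one field)

soft = deflicker(line)
print([row[0] for row in soft])                       # [0.0, 0.25, 0.5, 0.25, 0.0]
print(field_energy(soft, 0), field_energy(soft, 1))   # 0.5 0.5  (fields balanced)
```

Because the kernel is normalized, the center line drops to 50% and its neighbors to 25%: the neighbors sit at half the brightness of the line itself, and each field now carries the same total energy. Applied to a full alternating black-and-white pattern, the same filter yields a uniform 50% gray everywhere except the clamped top and bottom rows, so the twitter is gone, and so is that finest detail.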

In deflickering, of course, you've lost some vertical detail. It turns out that the best tradeoff for most pix between resolution and twitter is around 0.7 times the active line count; for NTSC this means you can really only resolve about 340 lines or so vertically. The 0.7 number, which includes the effects of both interlace and discrete scanning lines, is called the Kell factor.
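The Kell-factor arithmetic is simple enough to sketch in a few lines of Python. The value 0.7 is the rule of thumb quoted above; published values vary, so treat `KELL_FACTOR` and the helper `kell_resolution` as illustrative assumptions rather than a standard formula.

```python
# Kell-limited vertical resolution: usable TV lines ~ Kell factor x active scanlines.
# ASSUMPTION: K = 0.7, the rule-of-thumb value quoted in the text.
KELL_FACTOR = 0.7

def kell_resolution(active_lines, k=KELL_FACTOR):
    """Approximate vertical resolution, in TV lines, for a given active line count."""
    return active_lines * k

# Active line counts mentioned earlier in the article.
for name, lines in [("NTSC active picture", 486), ("DV (NTSC)", 480), ("analog gear", 483)]:
    print(f"{name}: {lines} lines -> about {kell_resolution(lines):.0f} TV lines")
```

For the 486-line NTSC picture this gives the roughly 340 lines quoted above; the 480-line DV raster comes out only slightly lower.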

Cheap Trick

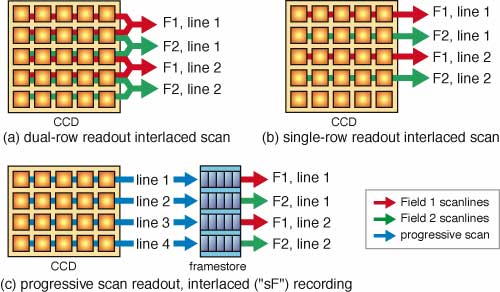

In tube cameras, twitter is avoided by shaping the scanning electron beam's landing spot so that it overlaps adjacent scanlines slightly. In CCD cameras, designers don't have that option; the size of a CCD cell is fixed. Instead, CCD cameras use a cheap trick: each scanline output from the chip is actually the sum of two adjacent scanlines or rows of pixels (figure 3a). For field one, a CCD's rows 1 and 2 are combined into scanline 1, rows 3 and 4 form scanline 2, 5 and 6 form scanline 3, and so on. For field two, rows 2 and 3 form the first scanline, 4 and 5 the second, etc. The imaging area of each resultant scanline overlaps its adjacent scanlines in the other field by half!
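The row pairing above can be written out as a short Python sketch. It numbers sensor rows from 1, as the text does, and simply sums each pair. The function names are hypothetical, and in a real chip the summing typically happens as charge inside the sensor, with details (edge rows, clipping, transfer losses) that this sketch ignores.

```python
# Dual-row CCD readout, following the pairing described in the text.
# Rows are numbered from 1 to match the text; sensor[i - 1] holds row i.
# ASSUMPTION: a plain sum of the two rows; leftover edge rows are simply dropped.

def row_pairs(num_rows, field):
    """Return the (upper, lower) sensor-row pairs combined into each scanline."""
    first = 1 if field == 1 else 2          # field one starts at row 1, field two at row 2
    return [(r, r + 1) for r in range(first, num_rows, 2)]

def dual_row_field(sensor, field):
    """Build one field: each scanline is the sum of two adjacent sensor rows."""
    return [[a + b for a, b in zip(sensor[r1 - 1], sensor[r2 - 1])]
            for r1, r2 in row_pairs(len(sensor), field)]

print(row_pairs(8, 1))   # [(1, 2), (3, 4), (5, 6), (7, 8)]
print(row_pairs(8, 2))   # [(2, 3), (4, 5), (6, 7)]
```

Each field-two pair shares one row with each of two field-one pairs, which is the half-scanline overlap described above. With a uniformly lit sensor, every output value is twice a single row's value, which is the sensitivity doubling discussed next.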

Scandalous! It's a wonder there's any vertical detail left! However, this methodology results in clean, quiescent pictures that look good on interlaced CRT displays. Furthermore, it doubles the camera's sensitivity, since each resultant "pixel" is the summed output of two physical pixels. Since the signal is doubled, yet random noise only increases by the square root of 2, the chip's signal-to-noise ratio improves by a factor of the square root of 2 (about 3 dB), too.
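The SNR improvement follows from how signal and noise add: the signal from two rows adds linearly, while independent random noise adds in quadrature. The Python sketch below computes the resulting gain and checks it with a small Monte Carlo simulation. The signal level of 10, the noise sigma of 1, and the Gaussian noise model are made-up assumptions for illustration, and the helper names are hypothetical.

```python
import math
import random

# Summing two pixel rows: the signal adds linearly (x2), while independent
# random noise adds in quadrature (its standard deviation grows by sqrt(2)).
# ASSUMPTION: both rows see the same signal plus independent Gaussian noise.

def snr_gain_db(rows_summed=2):
    """SNR improvement, in dB, from summing equal-signal rows with independent noise."""
    signal_gain = rows_summed
    noise_gain = math.sqrt(rows_summed)
    return 20 * math.log10(signal_gain / noise_gain)

def measured_snr(samples):
    """Mean divided by population standard deviation."""
    mean = math.fsum(samples) / len(samples)
    sd = math.sqrt(math.fsum((x - mean) ** 2 for x in samples) / len(samples))
    return mean / sd

# Monte Carlo check with made-up numbers: signal 10, noise sigma 1 per row.
rng = random.Random(2000)
N = 50_000
one_row = [10 + rng.gauss(0, 1) for _ in range(N)]
two_rows = [(10 + rng.gauss(0, 1)) + (10 + rng.gauss(0, 1)) for _ in range(N)]

print(f"predicted gain: {snr_gain_db():.2f} dB")   # predicted gain: 3.01 dB
print(f"measured ratio: {measured_snr(two_rows) / measured_snr(one_row):.3f}")  # close to 1.414
```

The simulated ratio lands near 1.414, the square root of 2, matching the roughly 3 dB analytic figure.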

Some higher-end cameras let you turn off or reduce the effects of dual-row scanning (by electronically shuttering the "extra" row), and output even fields composed mostly or solely of even rows' pixels and odd fields made up of odd rows' pixels (figure 3b). Sony calls this EVS, Enhanced Vertical Sharpness. EVS pix are vertically sharper, but when viewed on an interlaced display the images may flicker and twitter a bit on any fine detail in the image.
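A small Python sketch shows why EVS trades steadiness for sharpness. It reads out a single pixel column containing a detail only one sensor row tall, once with dual-row summing and once EVS-style. It assumes the fully shuttered case (field one built solely from rows 1, 3, 5 and so on, field two solely from rows 2, 4, 6 and so on); the function names are hypothetical, and real cameras may blend in the extra row rather than drop it entirely.

```python
# Compare dual-row readout with EVS-style single-row readout on a detail
# only one sensor row tall. column[0] is sensor row 1, column[1] is row 2, etc.
# ASSUMPTION: "full" EVS, with no contribution from the shuttered extra row.

def dual_row_field(column, field):
    """Field one sums rows (1,2), (3,4), ...; field two sums rows (2,3), (4,5), ..."""
    start = 0 if field == 1 else 1
    return [column[i] + column[i + 1] for i in range(start, len(column) - 1, 2)]

def evs_field(column, field):
    """Field one takes rows 1, 3, 5, ...; field two takes rows 2, 4, 6, ..."""
    start = 0 if field == 1 else 1
    return column[start::2]

# One pixel column of a sensor: a single white row (row 4) on black.
column = [0, 0, 0, 1, 0, 0, 0, 0]

for name, readout in [("dual-row", dual_row_field), ("EVS", evs_field)]:
    f1, f2 = readout(column, 1), readout(column, 2)
    print(f"{name}: field 1 {f1}, field 2 {f2}")
```

With dual-row readout the one-row line shows up in both fields (softened into a two-row-tall scanline, but steady). With EVS it exists in only one field, so an interlaced display shows it just once every 1/30 second, which is the twitter described above.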

Sony recommends EVS only for special purposes, not for general shooting. Aside from static twitter problems, vertical motion in the frame can cause fine detail to pop in and out of view (this is also a problem with CG rolls using finely detailed text; see http://www.adamwilt.com/Tidbits.html#CGs for more on this particular pathology).

That tears it

Interlaced pix are made up of two fields captured at two different points in time. While this is right and proper for interlaced CRT display, it plays hob with any process that wants to deal with an entire frame. If you've ever viewed a still frame on your NLE or tried to extract a single frame as a still, you've seen interfield "edge combing" or "tearing" caused when objects moving in the scene are present in two different places in the two fields (figure 1). Spatial resampling or repositioning in DVE work is also problematic; many DVEs simply work on a field-by-field basis, at least for moving images.
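The edge combing is easy to reproduce. This Python sketch captures a bar at two moments, keeps the even-indexed rows from the first capture and the odd-indexed rows from the second, then weaves them into one frame. The image size, bar width, and 3-pixel motion between fields are arbitrary assumptions chosen for illustration, and the helper names are hypothetical.

```python
# Weave two fields into one frame and show "combing" on a moving object.
# ASSUMPTIONS (illustrative only): a tiny text-art image, the first field holding
# even-indexed rows and the second field odd-indexed rows, and a bar that moves
# 3 pixels to the right between the two field captures.

WIDTH, HEIGHT = 12, 6

def capture(x, rows):
    """Render a 4-pixel-wide vertical bar starting at column x, for the given rows only."""
    return {r: "".join("#" if x <= c < x + 4 else "." for c in range(WIDTH)) for r in rows}

def weave(field_a, field_b):
    """Interleave two fields' rows back into one full frame."""
    merged = {**field_a, **field_b}
    return [merged[r] for r in range(HEIGHT)]

field_a = capture(2, range(0, HEIGHT, 2))  # first field: bar starts at column 2
field_b = capture(5, range(1, HEIGHT, 2))  # 1/60 s later: bar has moved to column 5

for row in weave(field_a, field_b):
    print(row)
```

Printed, the frame shows alternate rows offset by three pixels: the "comb" teeth you see on moving edges in a paused interlaced frame.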

Rate conversions are even more troublesome. Applying slo-mo to a clip often results in a characteristic bobble or bounce in fine vertical detail, as the slo-mo process (be it NLE software or a dynamic-tracking VTR's hardware) builds entire frames first from one field, then from the other. Standards conversion between NTSC and PAL, which must also change the field rate, suffers from the same problems, and HDTV upconverters that change frame rates as well as image resolutions are vastly more complex than ones that stay within the same frame rate. Dealing with two spatially and temporally displaced fields per frame in a clean and transparent manner is well-nigh impossible; even the good systems still have visible defects in their pix, though with enough "vertical low-pass filtering" (blurring) the images are quite watchable.
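The bobble shows up even on a picture that isn't moving at all. This Python sketch builds full frames from each field in turn by simple line repetition (often called "bob"), keeping each field at its true vertical position, and reports where a horizontal edge lands. Line repetition is an assumed, deliberately crude method; real slo-mo and deinterlacing systems usually interpolate instead. The helper names are hypothetical.

```python
# Build whole frames from single fields by line repetition ("bob"), as a
# slo-mo process might. Rows are 0-based; one field holds the even-indexed
# rows, the other the odd-indexed rows.
# ASSUMPTION: plain line doubling, with each field kept at its true vertical position.

def split_fields(frame):
    return frame[0::2], frame[1::2]

def bob(field, parity, height):
    """Expand one field to a full frame by repeating each line downward."""
    out = []
    for r in range(height):
        k = (r - parity) // 2            # index of the field line at or above row r
        out.append(field[max(k, 0)])     # rows above the field's first line reuse it
    return out

def first_white_row(frame):
    return next(r for r, v in enumerate(frame) if v == 1)

# A still picture: a horizontal edge, black above row 3, white from row 3 down.
still = [0, 0, 0, 1, 1, 1, 1, 1]
even_field, odd_field = split_fields(still)
print(first_white_row(bob(even_field, 0, len(still))),
      first_white_row(bob(odd_field, 1, len(still))))   # 4 3
```

The edge lands on row 4 in frames built from one field and on row 3 in frames built from the other, so on playback a perfectly still edge bounces up and down by a line.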

Progressive Pix, Part 1

Progressive-scan, or "proscan", images aren't new; the earliest television systems were proscanned. Of course, they were mechanical systems with whirling Nipkow disks that resolved maybe 30 scanlines, and vertical scanlines at that. We've come a long way since then.

The big deal with progressive-scan image capture is this: the entire frame is grabbed at one time, instead of being captured as two separate fields. There is no temporal offset between the even and odd scanlines. Stills can be pulled from any frame without worrying about motion-induced combing, and image processing can use the full frame's inherent resolution for DVEs, repositions, and rescaling. Proscanned pix also work very nicely for computer-based presentations and for web video; modern computer displays are all proscanned devices.

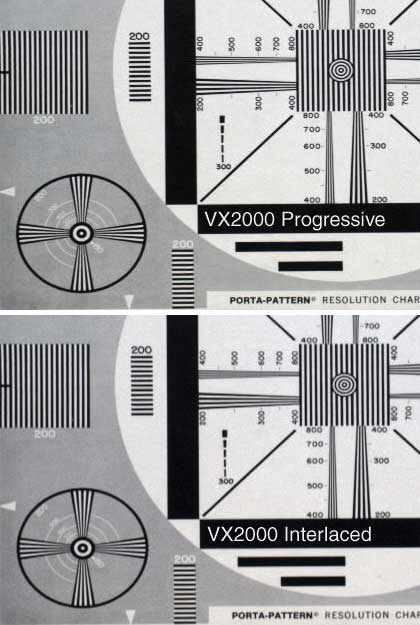

With proscanned CCDs, every row of pixels on the chip results in a video scanline; you can't perform dual-row readout. As far as image quality goes, it's as sharp as EVS, and sharper than the same image captured with dual-row interlaced readout (figure 2).

Proscanned cameras that work in an interlaced world capture the same amount of information as their interlaced brethren. Instead of grabbing 60 243-line fields in a second, they capture 30 486-line frames. The total data rate is the same, so these systems don't stress the technology. The images are scanned into a framestore and then written out to tape one field after the other, so aside from the fact that both fields were captured at the same time, the signal on tape is fully compatible with interlaced processing, transmission, and viewing equipment (figure 3c).
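The framestore-then-fields process, and the equal data rate, can be sketched in Python. Which rows go into which field, and which field is written first, are assumptions here (even-indexed rows form the first field); the helper names `segment` and `reassemble` are hypothetical.

```python
# Progressive capture recorded as two fields: the whole frame is grabbed at
# once into a framestore, then written out one field after the other.
# ASSUMPTION: field one = even-indexed rows, field two = odd-indexed rows;
# actual field order varies by format and is not modeled here.

def segment(frame):
    """Split a progressive frame into the two fields written to tape."""
    return frame[0::2], frame[1::2]

def reassemble(field_one, field_two):
    """Weave the fields back together; nothing moved between them, so no combing."""
    frame = [None] * (len(field_one) + len(field_two))
    frame[0::2], frame[1::2] = field_one, field_two
    return frame

# Same data rate as interlace: 60 fields x 243 lines == 30 frames x 486 lines.
print(60 * 243, 30 * 486)   # 14580 14580

frame = [f"line {i}" for i in range(486)]
f1, f2 = segment(frame)
print(len(f1), len(f2))     # 243 243
```

Because both fields come from the same instant, weaving them back together reproduces the original frame exactly, with no combing.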

The 1080-line proscan HDTV formats, running at 24, 25, and 30 fps, also use this one-field-after-the-other recording method. In HD parlance, these are called "segmented frame" systems, and you'll see the abbreviation sF attached to some product specs to indicate this.

Some of Canon's single-chip DV camcorders offer true progressive scan, as do some Sony camcorders, albeit at 15 fps. Both Canon and Sony suggest that proscan is best used for stills, since all the problems of viewing EVS footage (twitter, "popping" details, and the like) on interlaced displays are present with proscanned pix. The proscanned footage also has only half or one quarter of the temporal resolution of interlaced pix. Shooting at 15 or 30 fps has a very different "look"; it's not the same sort of fluid motion that 60-field-per-second interlace has.

Progressive Pix, Part 2?

Then there are DVCPRO-Progressive, ABC's HD format of 720/60p, and Canon's Frame Movie Mode, a scan method that's neither true progressive nor interlace. But I've run out of bandwidth, so in a crude form of 2:1 compression I'll have to discuss those next month.

You are granted a nonexclusive right to reprint, link to, or frame this material for educational purposes, as long as all authorship, ownership and copyright information is preserved and a link to this site is retained. Copying this content to another website (instead of linking it) is expressly forbidden.

Last updated 2004.01.24 - the 20th Anniversary of the Macintosh